To highly technical audiences, it is unsurprising that Telegram is not cryptographically secure and that Signal is much better.

Numerous security experts have pointed out that Telegram’s reputation as a secure messenger is primarily a result of its marketing rather than its technical architecture.

Unfortunately, to less technical audiences, Telegram appears to be a secure, encrypted messenger. Ars Technica, for example, published an article branding Telegram as an “encrypted messaging app” [archived version].

Telegram encryption

Yes, Telegram is encrypted, but only in transit, using its own MTProto protocol between your device and Telegram’s servers. This is the same basic level of security that almost all chat applications offer, including Discord and Facebook Messenger.

Default Telegram chats are not End-To-End encrypted. End-To-End encryption means your messages leave your device encrypted and stay encrypted all the way to the recipient’s device: servers along the way can relay them but cannot read them. Only the recipient’s device holds the key needed to recover the clear-text message from the encrypted form it has received.

Yes, Telegram offers End-To-End encryption, but only as an optional feature called “secret chats.” Regular chats do not use it unless you explicitly start a secret chat, and groups and channels can never be End-To-End encrypted.

Generally speaking, End-To-End encryption is the current state of the art in secure communication platforms. Signal, Threema and, most famously, WhatsApp all use End-To-End encryption by default, often abbreviated as E2EE.

Taking all this information at face value, it would be easy to conclude that Signal is more secure than Telegram. However, in this post, I’d like to argue that End-To-End encryption is only one of many factors to weigh when choosing a security-focused messaging application.

What is encryption?

The point of this post is to call attention to some Internet dwellers’ obsession with encryption.

Encryption can be categorised into two broad groups:

- At rest

- In transit

Sometimes, applications and/or systems can combine both kinds of encryption.

What I discussed above regarding E2EE falls under the category of “in transit” encryption. In-transit encryption means the network packets leaving a device are encoded in such a way that, even if you were able to intercept them, for example through a “Man-in-the-Middle” attack, you would not be able to tell what they contain.

In-transit encryption, in turn, comes in two broad categories:

- Client-to-Server

- Client-to-Client / Peer-to-Peer / End-To-End

Client-to-server encryption occurs when the client sends a network packet to a server in a way the server can decrypt. This is the most common application of in-transit encryption, and while it does indeed prevent Man-in-the-Middle attacks, it doesn’t prevent other kinds of attacks, such as those involving rogue insiders or government data requests accompanied by gag orders.

By contrast, End-To-End encryption uses cryptography in such a way that the server relaying the message cannot decrypt it. The server does receive some metadata for each message, such as sender, destination, timestamp, a checksum, and message size, but the actual message data is encrypted and unreadable to the server.
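The difference between the two models boils down to who holds the decryption key. The following is a minimal sketch of the End-To-End idea, assuming toy Diffie-Hellman parameters and a toy SHA-256 keystream cipher built only from Python’s standard library; it is not how Signal, WhatsApp, or Telegram’s secret chats are implemented and must not be used for real secrets. The helper names (`keypair`, `shared_key`, `encrypt`, `decrypt`) are hypothetical. The sketch also skips checking that the public keys really belong to Alice and Bob, which is what key verification in real apps is for.

```python
import hashlib
import hmac
import secrets

# TOY parameters: the Mersenne prime 2**127 - 1 and base 3. Real protocols use
# vetted groups or elliptic curves; these numbers only keep the sketch short.
P = 2**127 - 1
G = 3


def keypair() -> tuple[int, int]:
    """Return a (private, public) Diffie-Hellman key pair."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def shared_key(my_private: int, their_public: int) -> bytes:
    """Both sides compute G^(a*b) mod P, then hash it into a 32-byte key."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy stream cipher: SHA-256(key | nonce | counter) blocks, concatenated."""
    blocks = []
    for counter in range((length + 31) // 32):
        blocks.append(hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest())
    return b"".join(blocks)[:length]


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    body = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(key, nonce + body, hashlib.sha256).digest()  # detects tampering
    return nonce + body + tag


def decrypt(key: bytes, blob: bytes) -> bytes:
    nonce, body, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message was modified in transit")
    stream = _keystream(key, nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


# Alice and Bob generate key pairs; only the public halves travel via the server.
alice_priv, alice_pub = keypair()
bob_priv, bob_pub = keypair()

# Each side derives the same key locally. The server never sees it.
alice_key = shared_key(alice_priv, bob_pub)
bob_key = shared_key(bob_priv, alice_pub)

blob = encrypt(alice_key, b"meet at the usual place")  # what the server relays
print(decrypt(bob_key, blob))  # b'meet at the usual place'
```

In a Client-to-Server design, the server would itself be one of the two endpoints of this exchange, so it would hold a key and could read the message before re-encrypting it for the recipient.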

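Furthermore, when an application uses End-To-End encryption, it is good practice to also add Client-to-Server encryption. This is because, as mentioned above, the End-To-End encrypted message has unencrypted metadata associated with it. Wrapping the whole message in Client-to-Server encryption hides that metadata from anyone watching the network, even though the server itself can still read it.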
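To make the metadata point concrete, here is a hypothetical envelope structure (the `Envelope` class and both helper functions are illustrative names, not any app’s real API). It sketches, under simplifying assumptions, which parts of an End-To-End encrypted message a server and a network eavesdropper can see, with and without an outer layer of Client-to-Server encryption.

```python
from dataclasses import dataclass


@dataclass
class Envelope:
    """Hypothetical message envelope, loosely modelled on the metadata listed above."""
    sender: str
    recipient: str
    timestamp: int
    size: int
    ciphertext: bytes  # End-To-End encrypted payload: opaque to everyone but the recipient


def visible_to_server(env: Envelope) -> dict:
    # The server must read the routing metadata to deliver the message at all.
    return {"sender": env.sender, "recipient": env.recipient,
            "timestamp": env.timestamp, "size": env.size}


def visible_to_network_observer(env: Envelope, transport_encrypted: bool) -> dict:
    if transport_encrypted:
        # Client-to-Server encryption wraps the whole envelope, so an eavesdropper
        # is left with little more than the connection itself: when, and how much.
        return {"approx_size": env.size}
    # Without it, the eavesdropper sees the same metadata as the server.
    return visible_to_server(env)


env = Envelope("alice", "bob", 1_700_000_000, 71, bytes(71))
print(visible_to_network_observer(env, transport_encrypted=True))  # {'approx_size': 71}
print(visible_to_network_observer(env, transport_encrypted=False)["recipient"])  # bob
```

Note that the server sees the same metadata either way; the outer layer only protects against observers on the network.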

In the case of Telegram, messages are encrypted in transit using its own MTProto protocol, but only Secret Chats, an optional feature, are End-To-End encrypted. Regular chats, including groups and channels, are stored on Telegram’s servers in a form that Telegram itself can decrypt and access.

On threat models

As I mentioned, encryption is a highly desirable feature, but it is crucial to understand what we want to protect ourselves from.

This is called “threat modelling”. Every individual’s threat model is unique, which makes threat models difficult to generalise.

For me, it’s straightforward. I can be up to five different people at any given time, depending on my environment.

I’m a different person depending on whether I am with:

- My coworkers, at work

- My family

- My non-furry friends

- My furry friends

- My partner

And for me (again, this is a very personal matter that’s up to each of us to decide), it is important to keep all these aspects of my life separated and compartmentalised. It may not be important to you; you might think mixing work and family is fine, and that’s your decision to make.

I’m concerned with:

- My neighbour learning about my web browsing habits.

- My ISP knowing what kinds of websites I visit.

- My ISP, Google, AWS, or other service providers being able to read my messages.

- My non-furry life and my furry life mixing.

- My family and non-furry friends learning the kind of pornography I watch.

- My messaging application of choice knowing my real name or my phone number (which by law, in many countries, is associated with your real name and government-issued ID).

- An unauthorised third-party attacker being able to see my network packets and obtain my messages.

I’m not concerned with:

- The NSA / FBI / CIA spying on me

- Cookies installed in my browser

- Proprietary JavaScript running in my browser when I visit any website

- AWS / Cloudflare / Google knowing where I’m located geographically

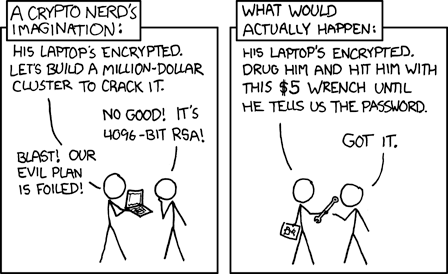

End-To-End encryption protects against passive Man-in-the-Middle attacks, employees with access to the servers, law enforcement agencies seizing a server’s hard disks, and even sophisticated infrastructure hacks that give a third party illegal access to the server’s storage. Beyond that, however, there’s little End-To-End encryption protects against. In specific scenarios and threat models, this is important. In my case (and possibly yours too), End-To-End encryption is less important than other factors.

It’s not that I don’t care about End-To-End encryption; it’s more that all security measures come with a usability tradeoff, and my threat model does not benefit at all from this additional layer of End-To-End encryption.

XKCD strip: https://xkcd.com/538/

Privacy and security

Phone number registration

Many apps, including Signal, WhatsApp, and Telegram, require your phone number to sign up and use the application. I think this is a gross privacy violation for many reasons, not least because, as I mentioned in my OPSEC post, many countries require your ID to be recorded against your phone number. This means that if your phone number and real name are ever leaked, whether through a hack or for any other reason, the privacy of your messaging account is compromised, as anyone can link your account to your real name.

Contact matching

Again, one of the biggest sins of messaging applications is that they upload your entire contact book to their servers so they can match your contacts’ phone numbers against their user database. This also gives the server the name and phone number of every contact on your phone, which means that, even if you never use an app, it most likely knows your real name and phone number because one of your contacts shared this information with it without your knowledge or approval.

Telegram and Signal are both guilty of this.
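The contact-matching flow described above can be sketched as follows. This is a deliberately naive model, assuming the client uploads raw phone numbers together with the names they were saved under; `match_contacts` and the phone numbers are hypothetical, and real services may add hashing or other protections that this sketch does not model.

```python
def match_contacts(address_book: dict[str, str], registered: set[str],
                   server_store: dict[str, str]) -> set[str]:
    """Hypothetical naive contact matching on the server.

    address_book maps phone numbers to the names the uploader saved them under.
    """
    # Everything uploaded is kept, including people who never signed up.
    server_store.update(address_book)
    # Only numbers belonging to registered users are reported back to the app.
    return set(address_book) & registered


registered_users = {"+15555550101"}
server_store: dict[str, str] = {}
my_contacts = {"+15555550101": "Alex (work)", "+15555550102": "Sam Doe"}

print(match_contacts(my_contacts, registered_users, server_store))  # {'+15555550101'}
# Sam never installed the app, yet the server now holds Sam's name and number.
print(server_store["+15555550102"])  # prints: Sam Doe
```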

Multiple accounts

Now, this might seem like a strange thing to point out. Many people probably see multiple accounts as merely a UX issue: a convenient feature to have, but one that few people use. Nothing could be further from the truth: having multiple accounts is a fundamental security and privacy feature for many groups of people.

This is really what privacy is about. It’s not about having “things to hide”. It’s about empowering every person to reveal themselves when and how they wish. Some people are fine being the same person in all environments. They keep few secrets. Other people, for example, like to have separation between work and home.

It’s up to each one of us to decide where to draw the line, and being able to use multiple accounts helps to achieve this goal.

Most famously, Signal does not allow multiple accounts. In fact, Signal doesn’t even allow you to have Signal installed on several smartphones. Telegram allows multiple accounts on one phone, as well as multiple phones logged into the same account.

Local storage encryption

This is important in situations where someone gains physical access to your device, most typically at airport security or when crossing borders. These are very high-stakes situations, and you can’t easily refuse a phone search without risking detention or immediate deportation. Although local storage encryption is not a silver bullet, it does help when you are asked to unlock your device and a copy of your smartphone’s storage is made: if they are particularly interested in reading your conversations, they would additionally have to ask you for the password that unlocks the chat application and its local storage.

While neither Telegram nor Signal offers this by default, a third-party Signal fork called Molly does allow you to set a passcode that encrypts the application’s contents on-device.
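As a rough illustration of passcode-based local encryption, the sketch below derives a storage key from a passcode using PBKDF2 from Python’s standard library. The function names, the iteration count, and the stored check value are assumptions for illustration, not Molly’s actual design; in a real app, the derived key would then encrypt the local message database (not shown).

```python
import hashlib
import hmac
import secrets
from typing import Optional

ITERATIONS = 600_000  # deliberately slow, so every passcode guess costs real time


def derive_key(passcode: str, salt: bytes) -> bytes:
    """Stretch a human passcode into a 32-byte key with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", passcode.encode("utf-8"), salt, ITERATIONS)


def _check_value(key: bytes) -> bytes:
    return hmac.new(key, b"unlock-check", hashlib.sha256).digest()


def set_passcode(passcode: str) -> dict:
    """Return what gets written to disk: a random salt and a check value, never the key."""
    salt = secrets.token_bytes(16)
    return {"salt": salt, "check": _check_value(derive_key(passcode, salt))}


def unlock(passcode: str, stored: dict) -> Optional[bytes]:
    """Re-derive the key; return it only if the passcode is correct."""
    key = derive_key(passcode, stored["salt"])
    return key if hmac.compare_digest(_check_value(key), stored["check"]) else None


stored = set_passcode("correct horse battery staple")
# A copy of the phone's storage contains only `stored` and the encrypted database;
# without the passcode, the key that decrypts the database cannot be recomputed.
key = unlock("correct horse battery staple", stored)
```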

Plausible deniability

Plausible deniability comes in many forms. One is others not being able to find your account by searching for your phone number or email. Another is hidden or disguised application icons, so nobody can immediately tell which messaging application you’re using.

Telegram generally doesn’t offer this, although there are third-party applications that let you change the icon (but not the app’s name). Signal, on the other hand, lets you disguise its app as, for example, a Weather app.

Other factors to keep in mind

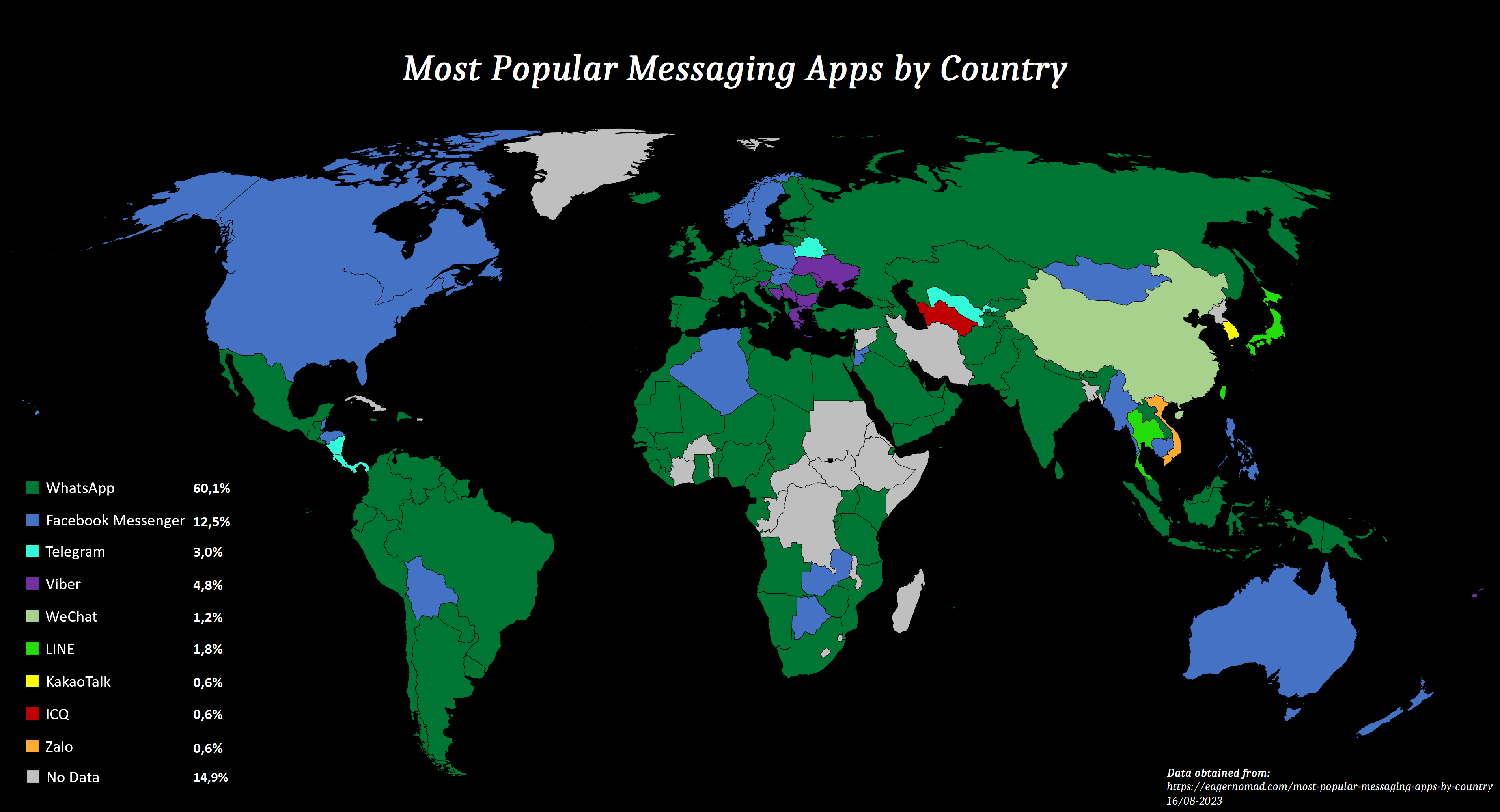

Popularity

It might seem strange to bring up a “popularity” point. How could that possibly affect privacy?

WhatsApp is an End-To-End encrypted chat application and by far the most widely used one. Having WhatsApp installed on your phone does not raise any suspicion if anyone were to go through your phone’s installed apps, which could happen, for example, when crossing an international border.

Signal, on the other hand, doesn’t even show up in most rankings [archived version], which is terrible news if anyone spots the application on your phone and asks you who you communicate with.

In many countries, using Signal is akin to painting a target on your back, while WhatsApp’s popularity means it is hardly going to raise any eyebrows.

Source model (is it open source?)

This one seems simple to answer. Signal is fully open source, including, critically, the server. Telegram, on the other hand, only open sources its clients. There is a third-party, reverse-engineered Telegram server called Teamgram, which is certainly an impressive feat given the complexity of the MTProto Protocol [archived version], especially considering that this protocol description is the only documentation available. Teamgram, however, is far from fully featured; it does not federate, which means you cannot talk to Telegram users on the official servers; and since it isn’t official, the official Telegram server remains unauditable.

However, Signal does not fully embrace the spirit of open source and libre software either. Moxie Marlinspike, Signal’s founder, has previously voiced concerns [archived version] about third-party Signal forks accessing the Signal network. This is highly suspicious and questionable for a supposedly open-source application. Telegram does sometimes ban third-party clients, especially those that break Telegram’s terms of service. Still, Telegram allows hundreds of forks and third-party apps to access the Telegram network, although it has recently started implementing restrictions, such as no longer allowing third-party applications to use SMS authentication or to sign up new users:

Starting on 18.02.2023, users logging into third-party apps will only be able to receive login codes via Telegram. It will no longer be possible to request an SMS to log into your app – just like when logging into Telegram’s own desktop and web clients.

See more here [archived version].

Connection architecture (is it P2P or client-server?)

Generally speaking, most chat applications are client-server. These include Telegram, WhatsApp, Discord, IRC, Skype, and many others.

Client-server architecture means that you run a piece of software on your devices (called a client), which communicates with devices owned and operated by the company that provides this application.

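This carries inherent risks, because the company’s servers become a central point of trust, but it also makes development much easier and cheaper.

Peer-to-peer removes that central point of trust, which makes it more secure, but client-server is more reliable: the server can hold messages until the recipient comes online, whereas a peer-to-peer message generally needs both devices to be reachable at the same time.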

Finances

Trust in any application, regardless of its source model, can be quickly and easily breached by inadequate financing or a lack of transparency. Would you trust your deepest secrets to an application that relies on community donations to keep up with development and hosting costs?

The forceful push by Telegram and Discord toward paid features is certainly alienating, but Telegram is highly profitable [archived version], which effectively allows Telegram to operate independently, with a lower risk of bribes, blackmail, or other kinds of third-party interference.

Signal, on the other hand, relies on MobileCoin [archived version], a cryptocurrency Ponzi scheme, which certainly doesn’t inspire confidence in Signal’s financial status. Signal also accepts donations [archived version], which can make it easy for parties with large amounts of cash (like a government) to influence the direction of Signal.

Threema, as another example, offers its app for personal use for a small one-time payment, and also offers Threema Work and Threema OnPrem, paid versions for businesses and governments with higher security standards.

Can it be self-hosted?

For client-server applications, hosting the server part on your own hardware can give you more control and security guarantees. In some cases, you can also keep your server private with no outside communication other than its users, creating gated communities.

As explained above, it is possible to host your own Signal server, but you will then not be able to message users on the official Signal server. The same applies to Telegram and the third-party Teamgram server.

Other chat platforms that can be self-hosted include Matrix and XMPP; however, these options are far from perfect.

The human factor

No matter how secure a computer system is, humans are always the weakest link in the chain. This applies to everything, and especially to chat applications. Creating group chats in encrypted applications but leaving them open for anyone to join makes the encryption fairly useless. A truly secure encrypted group chat would require all members to verify each other, which quickly becomes impractical as the group grows.

No application and no software can mitigate the human factor.
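A quick calculation shows why group verification does not scale. Assuming every pair of members has to verify each other directly (for example, by comparing key fingerprints in person), a group of n members needs n(n - 1)/2 verifications. The function name below is purely illustrative.

```python
def pairwise_verifications(members: int) -> int:
    """Number of distinct member pairs that must verify each other's keys."""
    return members * (members - 1) // 2


for n in (2, 10, 50, 200):
    print(n, pairwise_verifications(n))
# 2 1
# 10 45
# 50 1225
# 200 19900
```

The count grows quadratically, so a 200-member group already needs almost twenty thousand separate checks, and a single unverified pair is enough to undermine it.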

Self-destructing messages

Many chat applications, like Telegram and Signal, offer self-destructing messages. The problem is that they rely on the other party’s goodwill. Even if the application prevents Android or iOS from taking screenshots of its contents, once the data leaves your device it is no longer yours, and it can be extracted, however difficult that may be.

For Telegram, for instance, there is Ayugram, a third-party client that violates the terms of service in a number of ways, including by ignoring remote deletions and message expiration timers.

Therefore, you should only use self-destructing messages with people you already trust, when both of you want to avoid keeping messages for longer than necessary. You must never rely on self-destructing messages to keep information from the other party.
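The underlying problem is that the expiry timer is enforced by the recipient’s software, not by the sender. The hypothetical sketch below (the `Message`, `HonestClient`, and `ModifiedClient` names are illustrative, not Telegram’s or Ayugram’s actual code) shows how a modified client can simply skip the deletion step without the sender noticing.

```python
from dataclasses import dataclass


@dataclass
class Message:
    text: str
    sent_at: float
    ttl_seconds: float  # expiry timer chosen by the sender


class HonestClient:
    def __init__(self) -> None:
        self.inbox: list[Message] = []

    def receive(self, msg: Message) -> None:
        self.inbox.append(msg)

    def purge_expired(self, now: float) -> None:
        # Deletion happens here, on the recipient's device, and nowhere else.
        self.inbox = [m for m in self.inbox if now < m.sent_at + m.ttl_seconds]


class ModifiedClient(HonestClient):
    def purge_expired(self, now: float) -> None:
        # A modified client just skips the deletion; the sender has no way to tell.
        pass


msg = Message("secret", sent_at=0.0, ttl_seconds=60.0)
honest, modified = HonestClient(), ModifiedClient()
for client in (honest, modified):
    client.receive(msg)
    client.purge_expired(now=120.0)  # two minutes later, well past the timer

print(len(honest.inbox), len(modified.inbox))  # 0 1
```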

Other Signal criticisms: https://sequoia-pgp.org/blog/2021/06/28/202106-hey-signal-great-encryption-needs-great-authentication/